Response Control

Choose the structured response you need from three options: the aggregation, the aggregation result, or a natural language answer.

Retrieve only the aggregation pipeline, or let Florentine.ai execute it directly and return the aggregation result.

Florentine.ai can also return the result as a natural language answer.

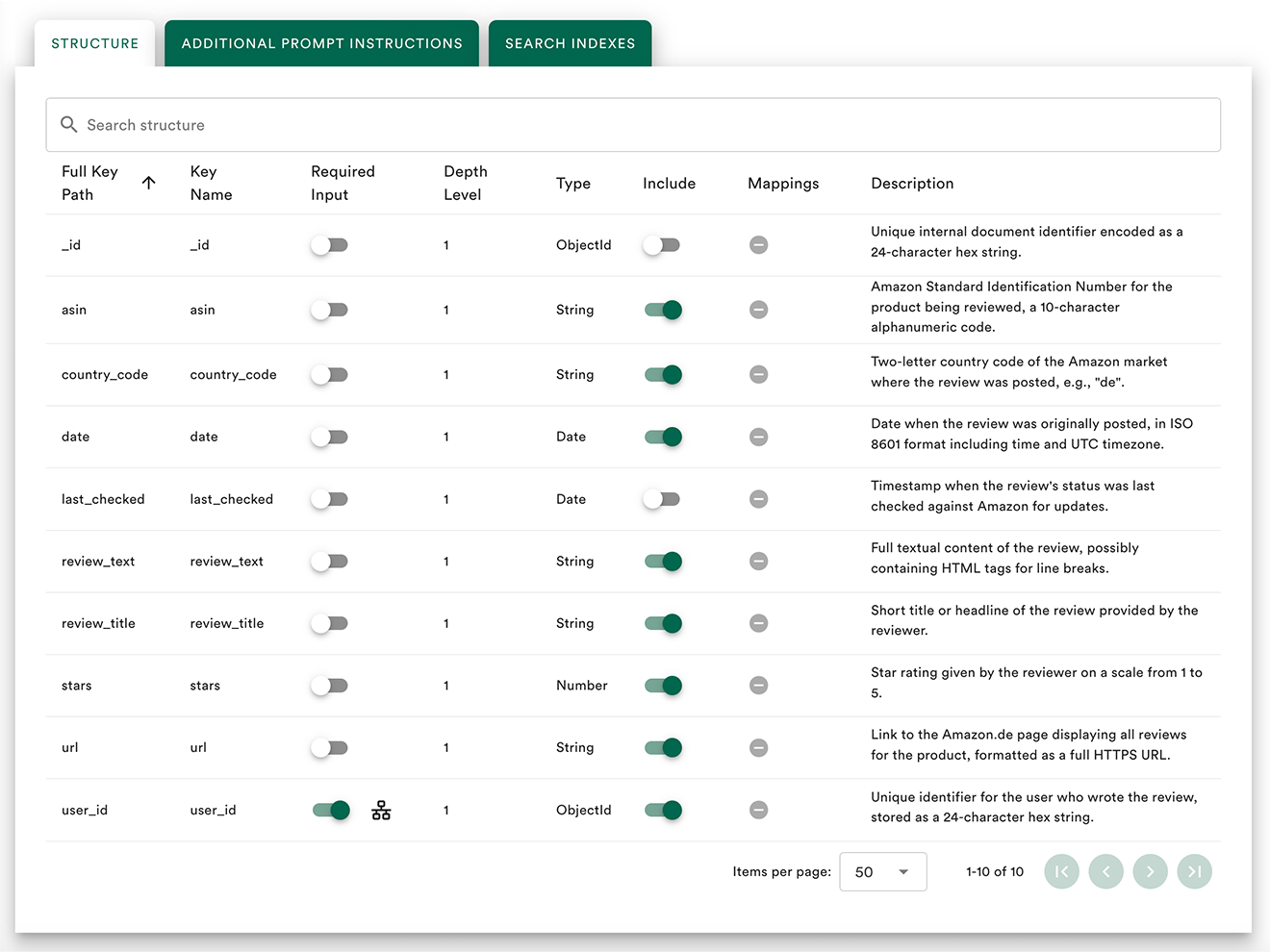

Schema Exploration

Florentine.ai automatically explores and analyzes the schema of your collections.

This enables the LLM to understand the structure of your data and generate accurate, schema-aware aggregation pipelines without manual configuration.
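A common way to explore a schema is to sample documents and record which value types occur at each field path. The sketch below illustrates that general technique. The function `infer_schema` and the sample data are hypothetical, and Florentine.ai's actual analysis is likely more detailed.

```python
from collections import defaultdict


def infer_schema(documents: list[dict]) -> dict[str, set[str]]:
    """Map each dotted field path to the set of value type names seen in the sample."""
    schema: dict[str, set[str]] = defaultdict(set)

    def walk(value, path: str) -> None:
        if isinstance(value, dict):
            # Descend into embedded documents, extending the dotted path
            for key, child in value.items():
                walk(child, f"{path}.{key}" if path else key)
        elif isinstance(value, list):
            schema[path].add("array")
            # Array elements share the parent path, as in MongoDB query syntax
            for item in value:
                walk(item, path)
        else:
            schema[path].add(type(value).__name__)

    for doc in documents:
        walk(doc, "")
    return dict(schema)


sample = [
    {"_id": 1, "name": "Ada", "address": {"city": "London"}, "tags": ["admin"]},
    {"_id": 2, "name": "Linus", "address": {"city": "Helsinki", "zip": "00100"}},
]
for path, types in sorted(infer_schema(sample).items()):
    print(path, sorted(types))
```

Optional fields such as `address.zip` appear only when at least one sampled document contains them. For that reason, the sample size matters for this kind of inference.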

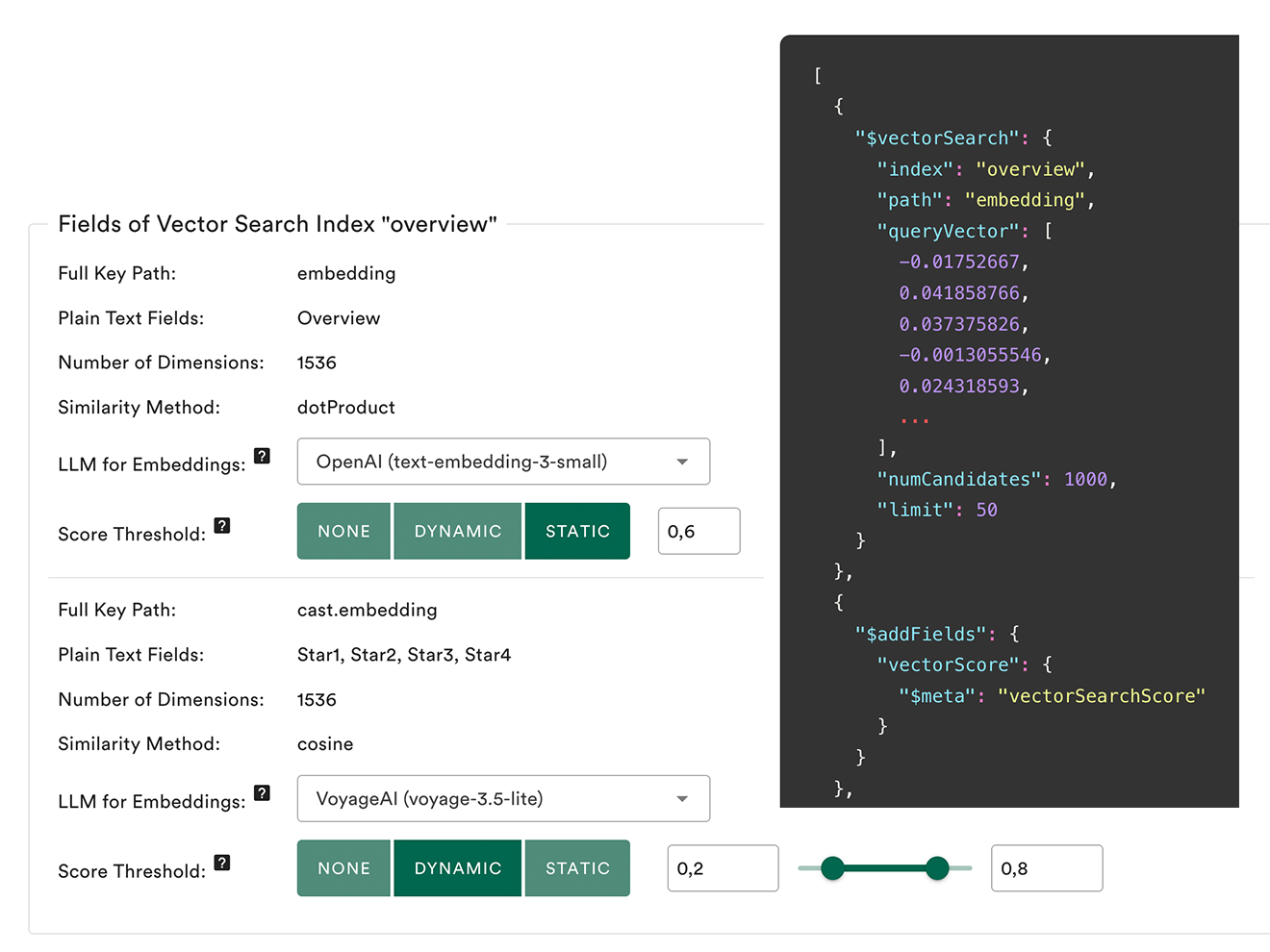

Vector Search / RAG

Run semantic searches on your data with automated embedding creation for advanced RAG (Retrieval-Augmented Generation) support.

Define score thresholds to fine-tune your vector searches and return only the data you expect.
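In MongoDB Atlas, you can express a score threshold by exposing the `vectorSearchScore` metadata and filtering on it with `$match`. The sketch below builds such a pipeline as plain Python dicts. The index name, field names, vector and threshold are hypothetical placeholders. This is not necessarily how Florentine.ai applies its thresholds internally.

```python
def vector_search_pipeline(
    index: str,
    path: str,
    query_vector: list[float],
    min_score: float,
    fields: tuple[str, ...] = ("title",),
    limit: int = 10,
    num_candidates: int = 100,
) -> list[dict]:
    """Build an Atlas $vectorSearch pipeline that drops hits scoring below min_score."""
    projection = {field: 1 for field in fields}
    # Expose the similarity score so later stages can filter on it
    projection["score"] = {"$meta": "vectorSearchScore"}
    return [
        {
            "$vectorSearch": {
                "index": index,
                "path": path,
                "queryVector": query_vector,
                "numCandidates": num_candidates,
                "limit": limit,
            }
        },
        {"$project": projection},
        # The threshold: keep only results scoring at or above min_score
        {"$match": {"score": {"$gte": min_score}}},
    ]


pipeline = vector_search_pipeline(
    index="listings_vector_index",     # hypothetical index name
    path="description_embedding",      # hypothetical embedding field
    query_vector=[0.12, -0.03, 0.88],  # shortened placeholder vector
    min_score=0.8,
)
```

A higher `min_score` returns fewer but closer matches. If the threshold is set too high, a query can come back empty even when related documents exist.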

Secure Data Separation

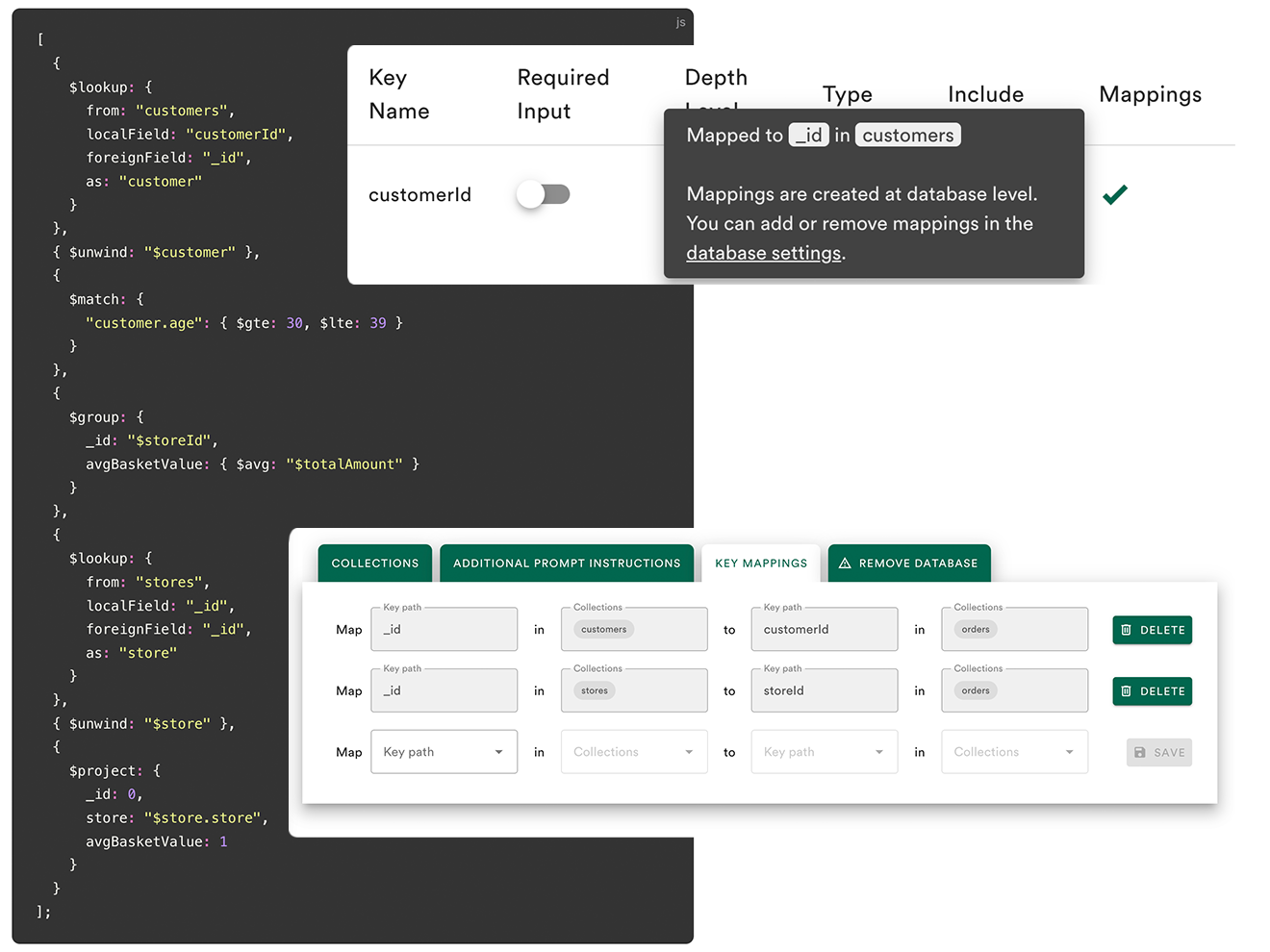

Florentine.ai ensures key/value-based data isolation for multi-tenant use, such as user-based data separation.

The internal transformation layer modifies aggregation pipelines to ensure secure data separation without relying on the LLM to do so.

Use the Required Inputs feature to define which fields will be added to the pipeline.
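One way such a transformation layer can enforce separation is to put a `$match` on the required key/value pairs in front of whatever pipeline the LLM produced. The sketch below assumes that approach. The function name, the `userId` key and the example pipeline are hypothetical, and Florentine.ai's actual transformation may differ.

```python
# Stages that MongoDB only allows as the first stage of a pipeline
FIRST_STAGE_ONLY = {"$vectorSearch", "$search", "$geoNear"}


def enforce_required_inputs(pipeline: list[dict], required: dict) -> list[dict]:
    """Return a new pipeline that filters on every required key/value pair."""
    guard = {"$match": dict(required)}
    if pipeline and not FIRST_STAGE_ONLY.isdisjoint(pipeline[0]):
        # Keep the first-stage-only operator in front and filter right after it
        return [pipeline[0], guard, *pipeline[1:]]
    return [guard, *pipeline]


# A pipeline as an LLM might generate it, without any tenant filter
generated = [{"$group": {"_id": "$status", "total": {"$sum": "$amount"}}}]
secured = enforce_required_inputs(generated, {"userId": "u_123"})
print(secured)
```

Because the filter is added in code, it is applied whether or not the model remembered to include it. This sketch only covers the queried collection. Documents pulled in through `$lookup` or `$unionWith` would need their own filter.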

MCP Server and API

Use the Florentine.ai MCP Server or the Florentine.ai API to add natural language querying of your MongoDB data to your project.

Integration is straightforward and well-documented. If you run into any issues, we will be glad to help.

Advanced Lookup Support

Complex questions can easily be answered with lookup stages spanning multiple collections.

Lookups work out of the box and are created automatically.

For edge cases where the connecting fields are not evident at first sight, you can manually assist the AI by linking collections through their keys with the Mappings feature.
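A key mapping between two collections translates naturally into MongoDB's equality-match `$lookup` stage. The sketch below shows that translation. The `KeyMapping` class and the `orders`/`customers` field names are hypothetical illustrations, not the Mappings feature's actual data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class KeyMapping:
    """Hypothetical description of how a field in one collection references another."""
    local_field: str
    foreign_collection: str
    foreign_field: str


def lookup_stage(mapping: KeyMapping, as_field: Optional[str] = None) -> dict:
    """Translate a key mapping into a MongoDB $lookup stage (equality match)."""
    return {
        "$lookup": {
            "from": mapping.foreign_collection,
            "localField": mapping.local_field,
            "foreignField": mapping.foreign_field,
            # Matched documents are stored as an array under this field
            "as": as_field or mapping.foreign_collection,
        }
    }


# orders.custRef holds customers.customerNo, which the field names alone don't reveal
mapping = KeyMapping(local_field="custRef", foreign_collection="customers", foreign_field="customerNo")
stage = lookup_stage(mapping, as_field="customer")
```

A mapping like this is exactly the kind of hint that is hard to infer automatically, because `custRef` and `customerNo` share no obvious name.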

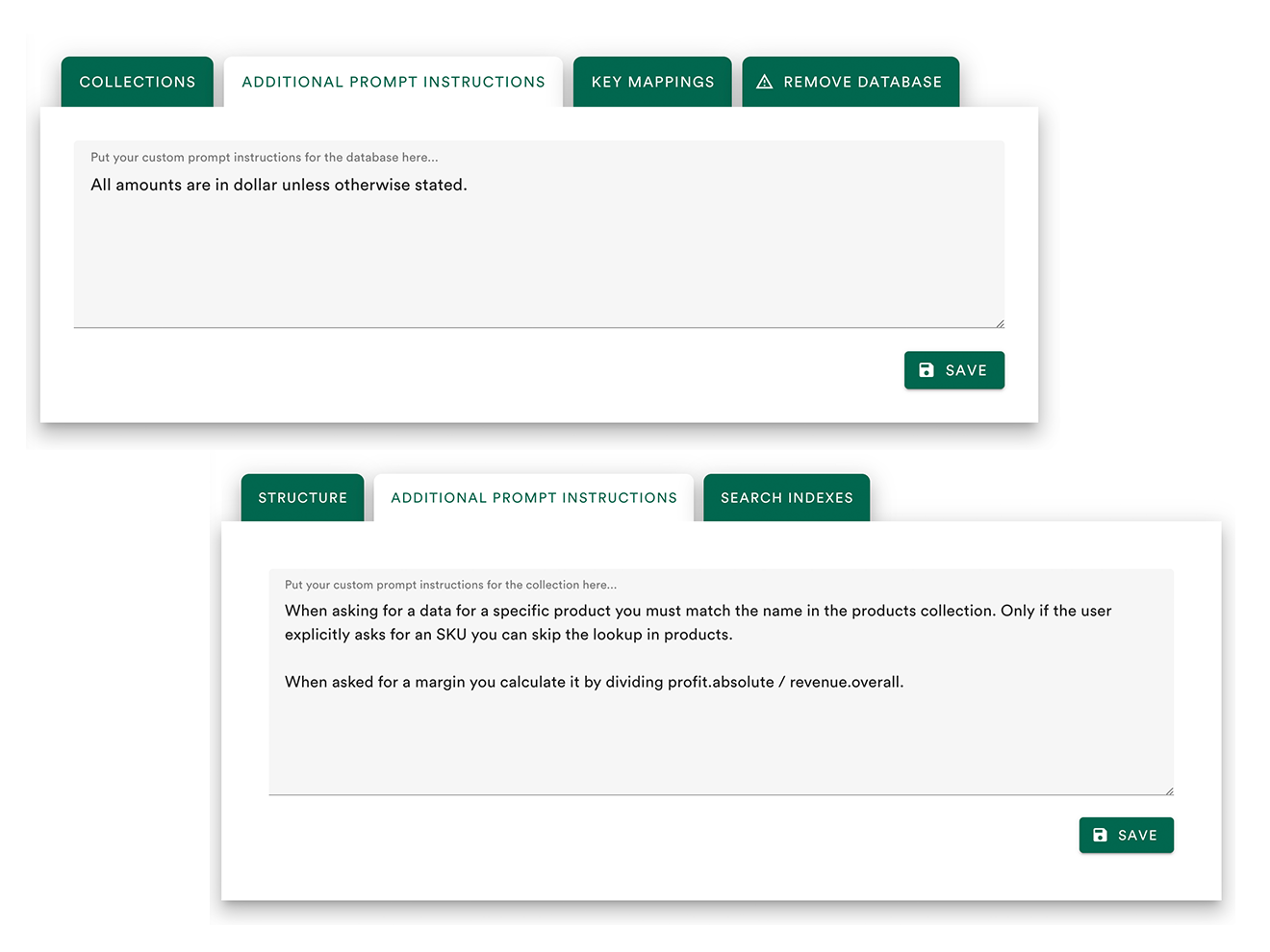

Custom Prompt Instructions

Gain better control over behavior for specific use cases by adding custom prompt instructions.

Custom prompts can be added at the database or collection level.

* Generates additional costs per MCP/API request with your LLM provider (OpenAI, Anthropic, Google and Deepseek are supported). Prices are exclusive of VAT where applicable.

No, because we offer a completely free account! It has all the features of the pro and enterprise accounts. The only limitations are the number of monthly requests and the number of simultaneously active databases and collections.

Just test with the free account, and once you are satisfied and need more monthly requests and/or active collections, upgrade your account.

"Bring your own key" means you add your own API key from the LLM provider of your choice to Florentine.ai. Currently we support OpenAI (ChatGPT), Anthropic (Claude), Google (Gemini) and Deepseek. That API key will be used for the aggregation requests.

The costs per request vary depending on the provider, the number of active collections and the quality settings. You can find the approximate costs per request on your Florentine.ai dashboard.

Florentine.ai works well with all four of these LLM providers: OpenAI (ChatGPT), Anthropic (Claude), Google (Gemini) and Deepseek. However, we generally recommend Anthropic (Claude), as it offers the best balance between quality and speed.

Yes, that's how Florentine.ai is supposed to be used. Integration into your own AI agent or desktop AI app is very straightforward via our MCP server or via our API.

We recommend using the playground for testing purposes, but not as the general point of entry. The playground is not an AI agent and will not offer the full experience.

While the MongoDB MCP server focuses on administrative tasks and simple raw query responses, Florentine.ai is built for end-user interactions with the database.

With features like secure data separation, out of the box RAG/Vector Search support, detailed collection structure analysis, key exclusions, automated aggregation execution and many more, Florentine.ai is the perfect choice for natural language querying of MongoDB databases.

Our "Required Inputs" feature allows you to define keys that always have to be present in aggregations created by Florentine.ai. This means you have to pass a value for each of these keys, e.g. a "userId", when using the MCP server or API.

The internal transformation layer of Florentine.ai ensures these values (e.g. the user's ID) are included in the aggregation without relying on the LLM to do so. This ensures that only data the user is allowed to access is returned.
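On the receiving side, the rule that every required key needs a value amounts to a simple check before any pipeline runs. The sketch below assumes the request is rejected when a value is missing. `resolve_required_inputs` and `MissingRequiredInputError` are hypothetical names, and Florentine.ai's actual error handling may differ.

```python
from typing import Any, Iterable


class MissingRequiredInputError(ValueError):
    """Raised when a request omits a value for a required key."""


def resolve_required_inputs(required_keys: Iterable[str], provided: dict[str, Any]) -> dict[str, Any]:
    """Return exactly the required key/value pairs, or fail if any is missing."""
    keys = list(required_keys)
    missing = [key for key in keys if provided.get(key) is None]
    if missing:
        # Refuse to run rather than risk an unfiltered query
        raise MissingRequiredInputError("missing required inputs: " + ", ".join(missing))
    return {key: provided[key] for key in keys}


# Extra parameters such as "locale" are not turned into filters
filters = resolve_required_inputs(["userId"], {"userId": "u_123", "locale": "en"})
```

Failing closed is the important design choice here. A missing user ID should produce an error, never a query across all tenants.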

Yes. We can offer a short live demo of our service. Just email us at demo@florentine.ai and we will get back to you shortly.

However, you can also easily test the functionality yourself. Signup is free and setup can be done during a coffee break.

Florentine.ai automatically detects vector search indexes and embeddings (and the model used for creating these) in your collections.

Florentine.ai then modifies the aggregation pipeline by replacing the text query with its actual embedding, generated with the matching embedding service and model.

You may also define individual score thresholds for each collection in the search index settings of your Florentine.ai account.
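The replacement step above can be pictured as a pass over the pipeline that swaps a text placeholder for a vector. The key constraint is that the query is embedded with the same model that produced the stored vectors. Otherwise the similarity scores are meaningless. The sketch below makes several assumptions, all hypothetical: the draft pipeline carries a `queryText` field, and `fake_embed` stands in for a real embedding API call and does not compute a real embedding.

```python
import copy
from typing import Callable

# An embedder takes (text, model name) and returns a vector
Embedder = Callable[[str, str], list[float]]


def embed_query_text(pipeline: list[dict], embed: Embedder, model: str) -> list[dict]:
    """Replace the hypothetical `queryText` of each $vectorSearch stage with `queryVector`."""
    result = copy.deepcopy(pipeline)  # leave the caller's pipeline untouched
    for stage in result:
        search = stage.get("$vectorSearch")
        if search is not None and "queryText" in search:
            search["queryVector"] = embed(search.pop("queryText"), model)
    return result


def fake_embed(text: str, model: str) -> list[float]:
    """Stand-in for a real embedding API call; returns a toy 2-d vector, not a real embedding."""
    return [float(len(text)), float(len(model))]


draft = [{"$vectorSearch": {"index": "listings_vector_index", "path": "description_embedding",
                            "queryText": "cozy apartments near the river",
                            "numCandidates": 100, "limit": 10}}]
final = embed_query_text(draft, fake_embed, model="text-embedding-3-small")
```

In a real setup, the `model` argument would come from the model detected for the collection's stored embeddings rather than being hard-coded.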